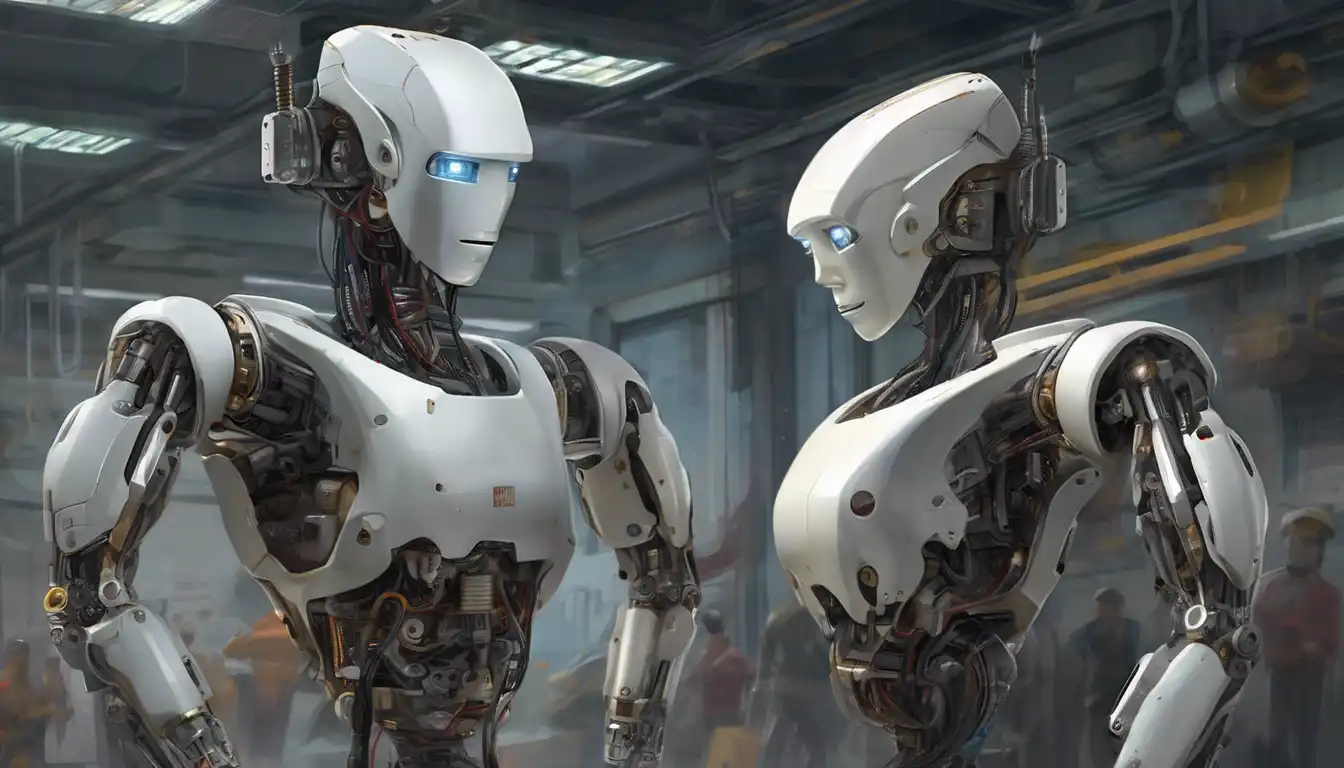

The Moral Dilemmas of Autonomous Robots

Autonomous robots have become a cornerstone of technological innovation. Their rise, however, raises complex ethical questions that society must address. This article examines the moral landscape of self-operating machines and the implications of their use across several sectors.

Understanding Autonomous Robots

Autonomous robots are machines capable of performing tasks without continuous human control. They rely on artificial intelligence (AI), including machine learning, to make decisions. Because those decisions are not made directly by a person, they raise questions about accountability and moral responsibility.

Ethical Considerations

The deployment of autonomous robots in fields such as healthcare, the military, and transportation has sparked debate on several ethical fronts:

- Accountability: Who is responsible when an autonomous robot makes a mistake?

- Privacy: How do we protect individual privacy when robots collect data?

- Employment: What is the impact of robots on human jobs?

- Safety: How can we ensure that autonomous robots do not harm humans?

The Role of AI in Ethical Decision-Making

AI governs how autonomous robots make decisions, so the question of how to encode ethical guidelines into AI systems is the subject of intense debate. Should robots be programmed to follow fixed ethical rules, or should they be able to learn and adapt their moral compass?
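To make the "fixed rules" option concrete, here is a minimal sketch, assuming a hypothetical planner that proposes candidate actions and attaches a task score, an estimated risk of harm, and a flag for whether the action collects personal data. Every name and the 1% risk threshold are illustrative assumptions, not drawn from any real robotics system. Hard-coded rules veto impermissible actions before the robot picks the most useful remaining one; a learning-based approach would instead adjust these scores or constraints from experience.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Action:
    name: str
    utility: float                # hypothetical task score from the planner
    risk_to_humans: float         # hypothetical estimated probability of harm, 0..1
    collects_personal_data: bool  # hypothetical privacy flag


# A rule returns True if the action is permitted.
Rule = Callable[[Action], bool]

RULES: List[Rule] = [
    lambda a: a.risk_to_humans < 0.01,       # safety: assumed 1% risk ceiling
    lambda a: not a.collects_personal_data,  # privacy: forbid personal-data collection
]


def choose_action(candidates: List[Action], rules: List[Rule]) -> Optional[Action]:
    """Return the highest-utility action that passes every rule, or None."""
    permitted = [a for a in candidates if all(rule(a) for rule in rules)]
    if not permitted:
        return None  # no permissible action: defer to a human operator
    return max(permitted, key=lambda a: a.utility)


candidates = [
    Action("fast_route", utility=0.9, risk_to_humans=0.05, collects_personal_data=False),
    Action("slow_route", utility=0.6, risk_to_humans=0.001, collects_personal_data=False),
    Action("camera_scan", utility=0.8, risk_to_humans=0.0, collects_personal_data=True),
]
print(choose_action(candidates, RULES).name)  # prints "slow_route"
```

The rules here always override utility: the fastest route is rejected for its risk and the camera scan for its data collection, even though both score higher. That rigidity is exactly what the debate is about, since fixed rules are predictable and auditable but cannot adapt to situations their authors did not foresee.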

Future Directions

As robotics enters a new era, it is imperative to establish ethical frameworks that guide the development and use of autonomous robots. Collaboration among technologists, ethicists, and policymakers is essential to navigate the moral dilemmas these machines pose.

In conclusion, the ethics of autonomous robots is a multifaceted issue that requires careful consideration. By addressing these challenges directly, we can harness the benefits of autonomous robots while minimizing their potential harms.